Robustness: Making Progress by Poking Holes in Artificial Intelligence Models

Findings from two published studies could lead to enhancements in artificial intelligence (AI) models by focusing on their flaws.

One paper found that adding visual attributes to text in multimodal models could boost performance and usefulness for humans.

Another study determined that few-shot learning (FSL) models lack robustness against adversarial treatments and need improvements.

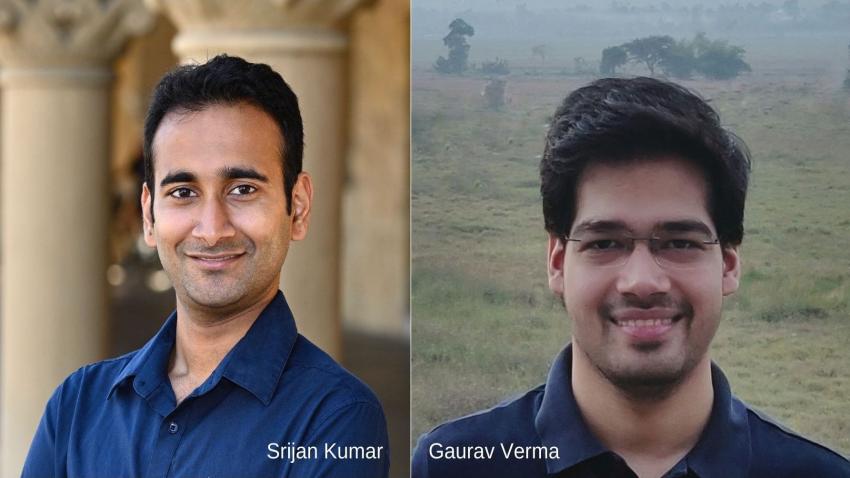

Georgia Tech Assistant Professor Srijan Kumar and Ph.D. student Gaurav Verma lead the research being presented at the upcoming 61st Annual Meeting of the Association for Computational Linguistics (ACL 2023).

Co-authors from Georgia Tech joining Kumar and Verma include Shivaen Ramshetty and Venkata Prabhakara Sarath Nookala, as well as Subhabrata Mukherjee, a principal researcher at Microsoft Research.

ACL 2023 brings together experts from around the world to discuss topics in natural language processing (NLP) and AI research. Kumar’s group contributes to those discussions with work focused on robustness in AI models.

“Security of AI models is paramount. Development of reliable and responsible AI models are important discussion topics at the national and international levels,” Kumar said. “As Large Language Models become part of the backbone of many products and tools with which users will interact, it is important to understand when, how, and why these AI models will fail.”


Robustness refers to the degree to which an AI model’s performance changes when using new data versus training data. To ensure that a model performs reliably, it is critical to understand its robustness.
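One simple way to picture this definition is as the accuracy a model loses when it moves from familiar data to new data. The sketch below is only an illustration of that idea, not the measurement used in either paper. The function names, labels, and predictions are all hypothetical and invented for this example.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the reference labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def robustness_gap(reference_preds, new_preds, labels):
    """Accuracy lost when moving from reference inputs to new inputs.

    A gap near zero suggests stable behavior; a large gap suggests the
    model is not robust to whatever changed between the two sets.
    """
    return accuracy(reference_preds, labels) - accuracy(new_preds, labels)


# Hypothetical data, made up purely for illustration.
labels = [1, 0, 1, 1, 0, 1, 0, 0]
reference_preds = [1, 0, 1, 1, 0, 1, 0, 1]  # 7 of 8 correct
new_preds = [1, 1, 0, 1, 0, 0, 0, 1]        # 4 of 8 correct

gap = robustness_gap(reference_preds, new_preds, labels)
print(f"accuracy drop: {gap:.3f}")  # accuracy drop: 0.375
```

In this toy case the model goes from 87.5% to 50% accuracy, a drop of 37.5 percentage points. Real evaluations would use a held-out test set and a task-appropriate metric rather than eight hand-written labels.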

Trust is central to robustness, both for researchers who work in AI and for consumers who use it.

People lose trust in AI technology when models perform unpredictably. This issue is relevant in the ongoing societal discussion about AI security. Investigating robustness can prevent, or at least highlight, performance issues arising from unmodeled behavior and malicious attacks.

Deep Learning for Every Kind of Media

One aspect of AI robustness Kumar’s group will present at ACL 2023 delves into multimodal deep learning. Using this method, AI models receive and apply data through modes including text, images, video, and audio.

The group’s paper presents a way to evaluate multimodal learning robustness called Cross-Modal Attribute Insertions (XMAI).

Using XMAI, the group found that multimodal models perform poorly in text-to-image retrieval tasks. For example, adding more descriptive wording to the search text for an image, such as changing “girl on a chair” to “little girl on a wooden chair,” caused the correct image to be retrieved at a lower rank.

Kumar’s group determined this when XMAI outperformed five other benchmarks in two different task retrieval tests.
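The retrieval-rank effect described above can be pictured with a toy example. The sketch below is not XMAI and does not use a real multimodal model. It assumes a hypothetical three-image gallery described by hand-written tags, and it scores matches with a simple bag-of-words cosine similarity as a stand-in for a learned text-image similarity. It only shows how one might check where the correct image lands before and after attributes are added to the query.

```python
import math

# Hypothetical vocabulary and gallery, invented for illustration only.
VOCAB = ["little", "girl", "boy", "wooden", "chair", "table"]
GALLERY = {
    "img_a": "girl chair",               # the image the user wants
    "img_b": "little boy wooden chair",  # a distractor sharing attributes
    "img_c": "wooden table",
}


def embed(text):
    """Bag-of-words count vector over VOCAB (stand-in for a real encoder)."""
    words = text.lower().split()
    return [float(words.count(w)) for w in VOCAB]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_of(target_id, query):
    """1-based position of target_id when the gallery is sorted by score."""
    q = embed(query)
    scores = {img: cosine(q, embed(tags)) for img, tags in GALLERY.items()}
    ordered = sorted(scores, key=scores.get, reverse=True)
    return ordered.index(target_id) + 1


rank_before = rank_of("img_a", "girl on a chair")
rank_after = rank_of("img_a", "little girl on a wooden chair")
print(rank_before, rank_after)  # 1 2
```

Here the added words “little” and “wooden” pull the query toward the distractor, so the intended image slips from first to second place. A real study would swap in an actual multimodal model and a benchmark dataset in place of these toy pieces.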

“By conducting experiments in a sandbox setting to identify the plausible realistic inputs that make multimodal models fail, we can estimate various dimensions of a model’s robustness,” said Kumar. “Once these shortcomings are identified, these models can be updated and made more robust.”

Labels Matter When It Comes to Adversarial Robustness

Prompt-based few-shot learning (FSL) is another class of AI models that, like multimodal learning, uses text as input.

While FSL is a useful framework for AI to improve task performance when labeled data is limited, Kumar’s group points out in their ACL findings paper that the adversarial robustness of these methods is not well understood.

“Our findings shine a light on a significant vulnerability in FSL models – a marked lack of adversarial robustness,” Verma explained. “This indicates a non-trivial balancing act between accuracy and adversarial robustness of prompt-based few-shot learning for NLP.”

Kumar’s team ran tests on six GLUE benchmark tasks, comparing FSL models with fully fine-tuned models. They found that adversarial perturbations caused a notably greater drop in task performance for FSL models than for fully fine-tuned models.

In the same study, Kumar’s group found and proposed a few ways to improve FSL robustness.

These include using unlabeled data for prompt-based FSLs and expanding to an ensemble of models trained with different prompts. The group also demonstrated that increasing the number of few-shot examples and model size led to increased adversarial robustness of FSL methods.
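The ensemble idea mentioned above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the group’s method. The three “models” are hypothetical keyword rules standing in for classifiers trained with different prompts, and the ensemble simply takes a majority vote, which is one common way to combine predictions.

```python
from collections import Counter


# Hypothetical stand-ins for models trained with different prompts.
def model_prompt_a(text):
    return "positive" if "good" in text.split() else "negative"


def model_prompt_b(text):
    words = text.split()
    return "positive" if "good" in words or "great" in words else "negative"


def model_prompt_c(text):
    return "negative" if "not" in text.split() else "positive"


def ensemble_predict(models, text):
    """Majority vote over the individual predictions.

    On a tie, Counter.most_common keeps the label that was seen first.
    """
    votes = [model(text) for model in models]
    return Counter(votes).most_common(1)[0][0]


models = [model_prompt_a, model_prompt_b, model_prompt_c]

# A character-level perturbation ("good" -> "g00d") fools one model,
# but the other two still outvote it.
perturbed = "the movie was g00d and great"
single = model_prompt_a(perturbed)
combined = ensemble_predict(models, perturbed)
print(single, combined)  # negative positive
```

The point of the toy is only that models relying on different cues are less likely to fail on the same perturbed input at the same time. Whether that holds in practice depends on the actual models and attacks involved.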

“Improved adversarial robustness of few-shot learning models is essential for their broader application and adoption,” Verma said. “By securing a balance between robustness and accuracy, all from a handful of labeled instances, we can potentially implement these models in safety-critical domains.”
