New Large-Language Model Can Protect Social Media Users' Privacy

Social media users may need to think twice before hitting that “Post” button.

A new large-language model (LLM) developed by Georgia Tech researchers can help them filter content that could put their privacy at risk and offer alternative phrasing that keeps the context of their posts intact.

According to a new paper that will be presented at the 2024 Association for Computational Linguistics (ACL) conference, social media users should be careful about the information they self-disclose in their posts.

Many people use social media to express their feelings about their experiences without realizing the risks to their privacy. For example, a person revealing their gender identity or sexual orientation may be subject to doxing and harassment from outside parties.

Others want to express their opinions without their employers or families knowing.

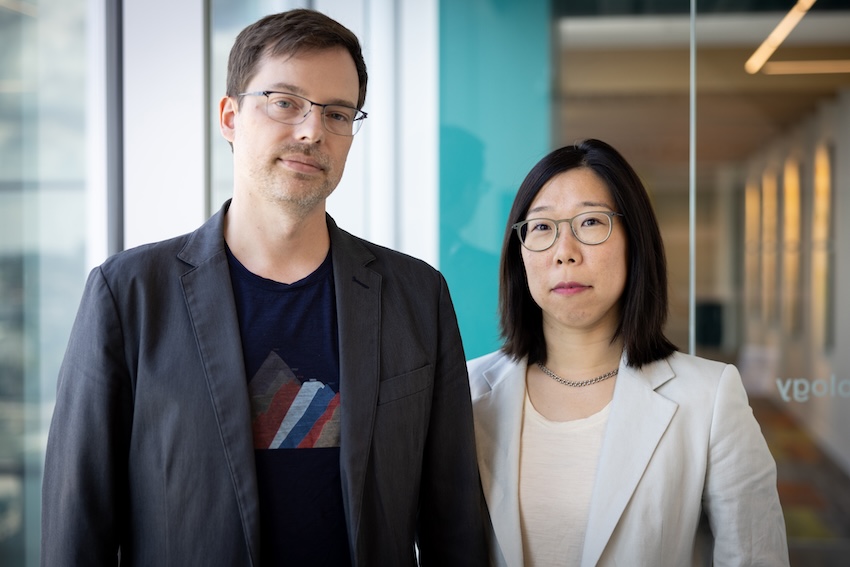

Ph.D. student Yao Dou and associate professors Alan Ritter and Wei Xu originally set out to study user awareness of self-disclosure privacy risks on Reddit. Working with anonymous users, they created an LLM to detect at-risk content.

While the study boosted users’ awareness of the personal information they revealed, many called for an intervention. They asked the researchers for help rewriting their posts so they would not have to worry about privacy.

The researchers revamped the model to suggest alternative phrases that reduce the risk of privacy invasion.

One user disclosed, “I’m 16F I think I want to be a bi M.” The new tool offered alternative phrases such as:

- “I am exploring my sexual identity.”

- “I have a desire to explore new options.”

- “I am attracted to the idea of exploring different gender identities.”

Dou said the challenge is making sure the model provides suggestions that don’t change or distort the desired context of the post.

“That’s why instead of providing one suggestion, we provide three suggestions that are different from each other, and we allow the user to choose which one they want,” Dou said. “In some cases, the discourse information is important to the post, and in that case, they can choose what to abstract.”

WEIGHING THE RISKS

The researchers sampled 10,000 Reddit posts from a pool of 4 million that met their search criteria. They annotated those posts and created 19 categories of self-disclosures, including age, sexual orientation, gender, race or nationality, and location.

From there, they worked with Reddit users to test the effectiveness and accuracy of their model, and 82% of those users gave positive feedback.

However, some users thought the model was “oversensitive” because it highlighted content they did not believe posed a risk.

Ultimately, the researchers say users must decide what they will post.

“It’s a personal decision,” Ritter said. “People need to look at this and think about what they’re writing and decide between this tradeoff of what benefits they are getting from sharing information versus what privacy risks are associated with that.”

Xu acknowledged that future work on the project should include a metric that gives users a better idea of which types of content carry more risk than others.

“It’s kind of the way passwords work,” she said. “Years ago, they never told you your password strength, and now there’s a bar telling you how good your password is. Then you realize you need to add a special character and capitalize some letters, and that’s become a standard. This is telling the public how they can protect themselves. The risk isn’t zero, but it helps them think about it.”

WHAT ARE THE CONSEQUENCES?

While doxing and harassment are the most likely consequences of posting sensitive personal information, especially for those who belong to minority groups, the researchers say users have other privacy concerns.

Users should know that when they draft posts on a site, their input can be extracted by the site’s application programming interface (API). If that site has a data breach, a user’s personal information could fall into unwanted hands.

“I think we should have a path toward having everything work locally on the user’s computer, so it doesn’t rely on any external APIs to send this data off their local machine,” Ritter said.

Ritter added that users could also be targets of popular scams like phishing without ever knowing it.

“People trying targeted phishing attacks can learn personal information about people online that might help them craft more customized attacks that could make users vulnerable,” he said.

The safest way to avoid a breach of privacy is to stay off social media. But Xu said that is impractical because these sites provide resources and support that users may not find anywhere else.

“We want people who may be afraid of social media to use it and feel safe when they post,” she said. “Maybe the best way to get an answer to a question is to ask online, but some people don’t feel comfortable doing that, so a tool like this can make them more comfortable sharing without much risk.”

For more information about Georgia Tech research at ACL, please visit https://sites.gatech.edu/research/acl-2024/.
