Computer Scientists Build, Test, and Present Model to Curb Online Ban Evasion

Ban evasion is a problem for users, moderators, and platforms alike. Evaders torment users on popular websites and get banned by moderators, only to return under a new account to continue their malicious behavior.

Students and faculty at Georgia Tech’s College of Computing published a study that can help online moderators stop ban evasion in its tracks. In what they describe as the first data-driven study of ban evasion behavior, the research team developed a model that can predict and detect ban evasion.

The model could do more than keep the internet enjoyable for users; it could also make the world safer. According to the study, ban evasion is linked to harassment, the spread of terrorist propaganda, and some real-world acts of mass violence.

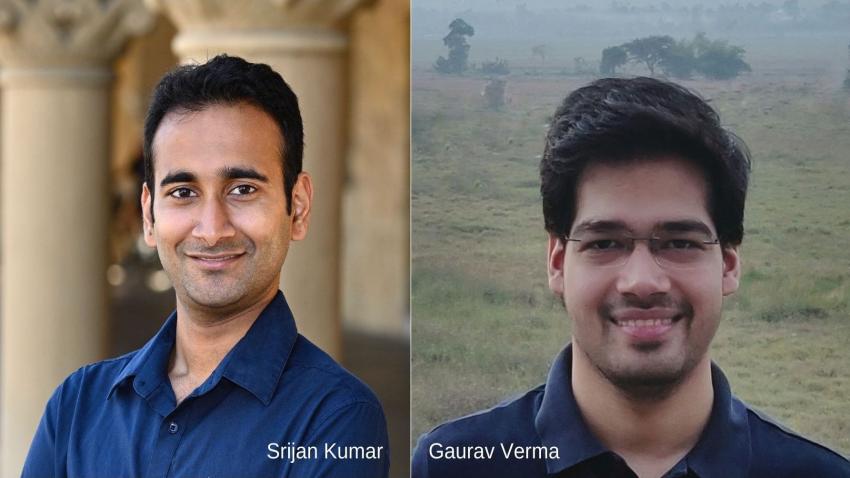

“Ban evasion is arguably one of the biggest threats to web safety and integrity,” said Srijan Kumar, assistant professor at the School of Computational Science and Engineering (CSE) and co-author of the study. “It is incredibly trivial for malicious actors to create a new account to continue their malicious activities after their original account is banned. We wanted to understand how ban evaders behave and how to detect and prevent it.”

Along with Kumar, contributing researchers from the College include Gaurav Verma, a Ph.D. student in the School of CSE, and Manoj Niverthi, an undergraduate student majoring in computer science.

Kumar, Verma, and Niverthi teamed with Wikipedia to obtain data, study evader behavior, and test their model. The model could be applied wherever ban evasion occurs, such as on popular social media platforms, eBay, Khan Academy, and Twitch.

Although moderators currently use manual review and automated algorithms to identify malicious activity, they often have difficulty predicting and detecting evaders following bans. No tool exists to help automate ban evasion detection and prediction.

The team’s model recorded high accuracy in predicting whether malicious “parent” accounts will evade bans in the future, using data such as account creation timing, linguistic features, and edit history.

The study showed the model can correctly detect “child” accounts soon after they are created. This helps moderators automatically monitor their platforms for ban evaders.

The same model was also highly successful at distinguishing ban evaders from malicious non-evaders.
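
To make the idea concrete, here is a minimal sketch of how the kinds of signals the article mentions (account creation timing, language, and edit history) might be compared between a banned “parent” account and a newly created candidate account. It is not the research team's model. The `Account` fields, the similarity measures, the weights, and the sample accounts are all assumptions chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Account:
    # All fields are hypothetical; real platforms record much richer data.
    name: str
    created_hour: float            # hours since an arbitrary reference time
    banned_hour: Optional[float]   # None if the account was never banned
    text: str                      # sample of text the account has written
    pages_edited: frozenset        # titles of pages the account has edited


def jaccard(a, b):
    """Share of items two collections have in common (0.0 to 1.0)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def pair_features(parent, candidate):
    """Compare a banned parent with a candidate on three illustrative signals."""
    gap = candidate.created_hour - parent.banned_hour
    # Accounts created shortly after the ban score close to 1;
    # accounts created before the ban score 0.
    timing = 1.0 / (1.0 + gap / 24.0) if gap >= 0 else 0.0
    wording = jaccard(parent.text.lower().split(), candidate.text.lower().split())
    pages = jaccard(parent.pages_edited, candidate.pages_edited)
    return timing, wording, pages


def evasion_score(parent, candidate, weights=(0.4, 0.3, 0.3)):
    """Weighted sum of the three signals; the weights are arbitrary assumptions."""
    return sum(w * f for w, f in zip(weights, pair_features(parent, candidate)))


# Made-up accounts for demonstration only.
parent = Account("parent", 0.0, 100.0, "this article is biased garbage",
                 frozenset({"A", "B"}))
suspect = Account("suspect", 112.0, None, "this article is total garbage",
                  frozenset({"A", "B", "C"}))
bystander = Account("bystander", 500.0, None, "added citation for population figures",
                    frozenset({"D"}))
earlier = Account("earlier", 50.0, None, "this article is biased garbage",
                  frozenset({"A", "B"}))
```

In this toy setup, a moderator-facing tool would flag the candidates with the highest `evasion_score` for review. A trained model would learn how much each signal matters from labeled data instead of relying on fixed weights.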

Kumar, Niverthi, and Verma have made the ban evasion dataset available to the community to aid future research and the development of additional tools for Wikipedia moderators.

The team will also present the findings of their study virtually at The Web Conference, April 25-29. Formerly known as the WWW Conference, The Web Conference is the premier yearly international conference on the future directions of the World Wide Web.

This will be Niverthi’s first-ever paper presentation and Verma’s first paper presentation as a Ph.D. student.

“Our work opens new avenues to be proactive to smart ban evaders and malicious actors, rather than being reactive,” said Kumar. “I am incredibly proud of both Gaurav and Manoj who co-led the work.”
