Gordon Bell Finalist Uses Supercomputing to Connect the Dots

Information overload is all too common in the modern world, particularly in academia. With millions of articles and academic papers, connecting concepts throughout bodies of work is no simple task.

However, a breakthrough created by researchers from Georgia Tech and Oak Ridge National Laboratory (ORNL) presents a way to link the millions upon millions of data points found throughout volumes of information.

The paper outlining this method has been nominated for the 2020 Gordon Bell Prize, which recognizes outstanding achievement in high-performance computing (HPC), with an emphasis on applying HPC to applications across science, engineering, and large-scale data analytics.

The paper was co-authored by School of Computational Science and Engineering (CSE) master’s degree student Vijay Thakkar and Professor Rich Vuduc, together with ORNL researchers Piyush Sao, Hao Lu, Drahomira Herrmannova, Robert Patton, Thomas Potok, and Ramakrishnan Kannan. It presents a novel data mining approach that uses HPC to analyze large corpora of scholarly publications and discover how concepts relate to one another.

“In order to keep pushing the exponential progress of science, we need some level of analysis of different research papers so we can synthesize that into digestible information,” said Thakkar, who currently works in the HPC Garage research lab.

Thakkar joined the HPC Garage, led by Vuduc, one year ago to pursue research in sparse linear algebra, Vuduc’s area of expertise. A few weeks into the independent study, however, Vuduc approached Thakkar about an opportunity to work with the ORNL team as a CUDA developer. Since then, Thakkar’s research has focused on this project.

“To me, this project boils down to a very important issue which is that as technological progress becomes faster and faster, for each individual scientist, there is too much information out there to distill into something that is comprehensible. Making connections between different research groups gets harder and harder to do, stalling the progress science can make,” he said.

In this initial project, the team is focusing on processing the articles across PubMed as a particular case study. PubMed is a database of biomedical literature maintained by the National Library of Medicine and currently hosts approximately 18 million papers.

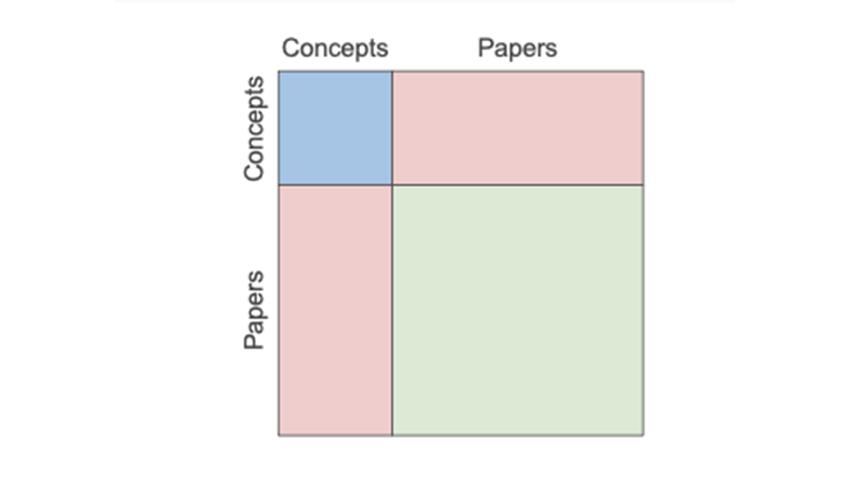

By mining key terms in the papers’ abstracts, the team is able to turn each term into a point, or vertex, in a large-scale graph.

“A term might be the name of a drug or a disease or a symptom, for instance. When two terms appear in the same paper, that means we know there is a direct relationship between them, which becomes an edge in the graph,” said Vuduc.
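As a rough illustration of the graph construction Vuduc describes, the Python sketch below builds a small co-occurrence graph. The three toy "papers," their terms, and the function name `build_cooccurrence_graph` are hypothetical and are not taken from the team's code or from PubMed.

```python
from collections import defaultdict
from itertools import combinations


def build_cooccurrence_graph(papers):
    """Terms become vertices; two terms sharing a paper become an edge."""
    graph = defaultdict(set)
    for terms in papers:
        unique_terms = sorted(set(terms))
        for term in unique_terms:
            graph[term]  # register the vertex even if it has no neighbors
        for a, b in combinations(unique_terms, 2):
            graph[a].add(b)
            graph[b].add(a)
    return dict(graph)


# Hypothetical key terms mined from three abstracts (made-up data).
papers = [
    ["aspirin", "headache"],
    ["headache", "migraine"],
    ["migraine", "triptan"],
]
graph = build_cooccurrence_graph(papers)
```

In this toy graph, "aspirin" and "triptan" never share a paper, so no edge joins them directly, yet a chain of edges through "headache" and "migraine" still links them.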

But just because two terms are not directly linked does not mean they are unrelated; it may only mean that the relationship has not been discovered yet. To find these as-yet undiscovered connections, the idea is to look for short paths that bridge two terms.

Vuduc said, “A useful analogy might be the following: Suppose you are driving from point A to point B. Maybe you know one path to get there. But what if there is a better, shorter way? That’s what this method does. Points are these biological or medical terms or concepts, and road segments are the papers that correspond to known routes. It’s the unknown routes that are interesting.”

These paths are analyzed using a shortest path algorithm referred to as DSNAPSHOT. While shortest path algorithms are not a new concept and are fairly common, the scale at which this particular shortest path algorithm is applied is unheard of.
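The article does not describe how DSNAPSHOT works internally. To show what an all-pairs shortest path computation looks like at toy scale, the sketch below uses the textbook Floyd-Warshall algorithm as a stand-in. The vertex names, the edges, and the choice of a weight of 1 per edge are assumptions made purely for illustration.

```python
import math


def floyd_warshall(vertices, edges):
    """Textbook all-pairs shortest paths on an undirected, unit-weight graph."""
    dist = {
        u: {v: (0 if u == v else math.inf) for v in vertices}
        for u in vertices
    }
    for u, v in edges:
        dist[u][v] = 1
        dist[v][u] = 1
    # Three nested loops over the vertices: the work grows as V * V * V.
    for k in vertices:
        for i in vertices:
            for j in vertices:
                through_k = dist[i][k] + dist[k][j]
                if through_k < dist[i][j]:
                    dist[i][j] = through_k
    return dist


# Hypothetical terms and co-occurrence edges (made-up data).
vertices = ["aspirin", "headache", "migraine", "triptan"]
edges = [
    ("aspirin", "headache"),
    ("headache", "migraine"),
    ("migraine", "triptan"),
]
dist = floyd_warshall(vertices, edges)
```

Here `dist["aspirin"]["triptan"]` is 3: the two terms share no paper, but a three-step path connects them. Short indirect paths of this kind are the candidates for undiscovered relationships.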

“If you think about it, six million papers may seem like a small number, but the size of the problem is not really six million. It’s six million times six million because in the worst-case scenario, each paper can have at least one connection to every paper in the database and that is where the complexity of the problem comes from,” said Thakkar.

However, according to Thakkar, the problem is more complex and more difficult to solve than this example – even with today’s supercomputers.

“It’s a much harder problem because you’re visiting each vertex once making this a V³ problem. At the scales we are talking about, this is 18 million times 18 million times 18 million and those orders of magnitude add up really quickly, which is why we need something like the Summit Supercomputer to crunch these numbers,” Thakkar said.
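To put the cubic growth Thakkar describes into numbers, the short Python calculation below multiplies out the figure from his quote. The variable names are illustrative, and the result is a raw count of vertex triples, not a measured operation count from the team's runs.

```python
# Vertex count taken from the quote: 18 million.
num_vertices = 18_000_000

pair_count = num_vertices ** 2    # every vertex paired with every other
triple_count = num_vertices ** 3  # the "V cubed" figure from the quote

print(f"pairs:   {pair_count:.3e}")    # pairs:   3.240e+14
print(f"triples: {triple_count:.3e}")  # triples: 5.832e+21
```

That is roughly 5.8 sextillion vertex triples. Doubling the number of vertices multiplies the cubic figure by eight.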

The team’s first finalist run, covering 4 million papers, took 30 minutes on more than 24,000 GPUs of the Summit machine. What’s more, the time needed to solve this type of problem will continue to grow cubically as papers are added to the PubMed database.

The Gordon Bell Prize winner will be announced at the 2020 Supercomputing Conference award ceremony, held Nov. 19 at 2 p.m. EST.
