Georgia Tech Researchers Explore New Ways to Give Navigation Directions to Robots

Robots can navigate buildings, but how do they know where to go? While some robots can follow pre-programmed routes, or be controlled by setting waypoints on a map, these methods are inflexible and can be unnatural to use. Researchers at Georgia Tech believe the best way to give robots navigation instructions is by talking to them.

“Giving natural language instructions to a robot is a fundamental research problem on the critical path to developing more flexible domestic robots that can work with people,” said Peter Anderson, a research scientist at Georgia Tech.

In a recent paper, Georgia Tech researchers introduced a new way for robots to reason about navigation instructions in an unknown environment.

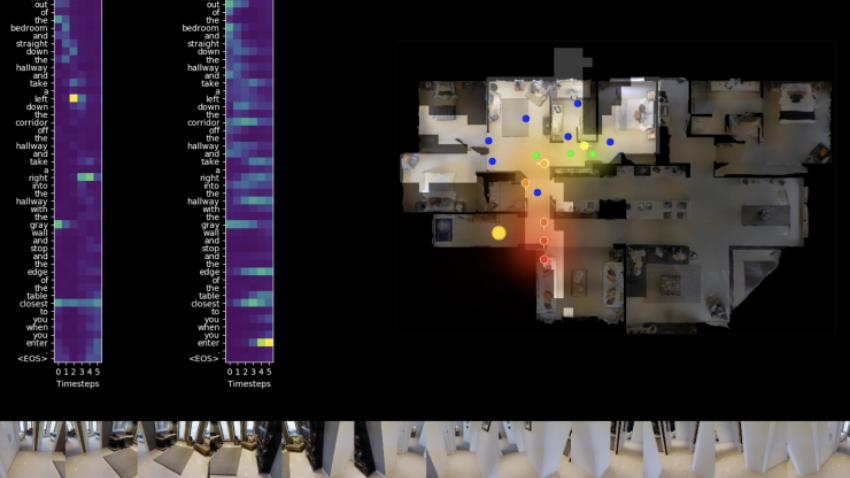

The team created a semantic map representation that updates each time the robot moves or sees something new. To reason about navigation instructions using this map, the lab found a way to take an algorithm used in classical robotics and apply it to artificial intelligence. The algorithm, called Bayesian state estimation, usually tracks the location of a robot from sensor measurements like lidar and wheel odometry. By adapting the algorithm, the researchers say, their robots can use it to model language instructions instead.

The paper got its title "Chasing Ghosts: Instruction Following as Bayesian State Tracking" because rather than tracking a robot from sensor measurements, the team is tracking the likely trajectory taken by an ideal agent or human demonstrator in response to the instructions. In this approach, the sensor measurements are the instructions themselves. This algorithm allows the agent to “reason” about all the different trajectories it could take and the probability of each trajectory when completing a task. By using an explicit map, researchers are easily able to inspect the model to see where the agent thinks the goal is and where it is likely to move next.
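To make the idea concrete, here is a minimal toy sketch of Bayesian state tracking in which the words of an instruction stand in for sensor readings. It is an illustration only and not the model from the paper: the one-dimensional `semantic_map`, the `predict` and `update` functions, and the probabilities `p_move` and `p_match` are all assumptions chosen for this example.

```python
# Toy histogram (Bayes) filter: track where an ideal instruction-follower
# would be, treating each instruction word as an "observation".
# All names and probabilities here are hypothetical, for illustration only.

def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]

def predict(belief, p_move=0.8):
    """Motion step: the tracked agent advances one cell with probability p_move."""
    n = len(belief)
    new_belief = [0.0] * n
    for i, b in enumerate(belief):
        nxt = min(i + 1, n - 1)           # stay in place at the end of the corridor
        new_belief[nxt] += p_move * b
        new_belief[i] += (1 - p_move) * b
    return new_belief

def update(belief, semantic_map, word, p_match=0.9):
    """Observation step: cells whose label matches the word become more likely."""
    likelihood = [p_match if label == word else 1 - p_match
                  for label in semantic_map]
    return normalize([l * b for l, b in zip(likelihood, belief)])

# A one-dimensional "semantic map" of a corridor (assumed for the example).
semantic_map = ["hall", "hall", "door", "hall", "kitchen"]

# The agent starts in the first cell with certainty.
belief = [1.0, 0.0, 0.0, 0.0, 0.0]

# The instruction, reduced to a sequence of landmark words.
for word in ["hall", "door", "hall", "kitchen"]:
    belief = update(predict(belief), semantic_map, word)

# The most probable final cell is the filter's estimate of the goal.
goal = max(range(len(belief)), key=belief.__getitem__)
print(goal, [round(b, 3) for b in belief])
```

Because the belief is an explicit distribution over map cells, it can be printed or plotted at every step, which mirrors the article's point that an explicit map makes it easy to inspect where the agent thinks the goal is.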

Currently, the robots move in simulated reconstructions of buildings, and communication is through written text, though some applications and off-the-shelf speech-to-text systems could work in conjunction with the existing system, according to researchers.

“Spoken language would definitely be more natural in many situations, so we might in the future investigate models that go directly from speech to robot actions,” said Anderson.

Anderson particularly likes to think about this work in the context of telepresence robots, though it could be applied to any robot.

“Telepresence robots are a great idea, but they are not as popular as they could be. Maybe we need smarter, more natural robots that just go where you tell them to go and look at what you ask them to look at,” said Anderson.

Think about all of the time that is lost commuting to work and walking to meetings. Imagine the positive effect on climate change if people needed to travel less for business. Anderson hopes this work will let people focus on their meetings and conversations rather than micromanaging a robot or jetting off around the world, and perhaps help with climate change along the way.

This work will be presented in December at the Thirty-third Conference on Neural Information Processing Systems (NeurIPS) 2019 in Vancouver, British Columbia.
